people will believe the worst...

As the world becomes more complex and governments everywhere struggle, trust in the internet is more important today than ever.

The internet is our shared space. It helps us connect. It spreads opportunity. It enables us to learn. It gives us a voice. It makes us stronger and safer together.

To keep the internet strong, we need to keep it secure. That's why at Facebook we spend a lot of our energy making our services and the whole internet safer and more secure. We encrypt communications, we use secure protocols for traffic, we encourage people to use multiple factors for authentication and we go out of our way to help fix issues we find in other people's services.

The internet works because most people and companies do the same. We work together to create this secure environment and make our shared space even better for the world.

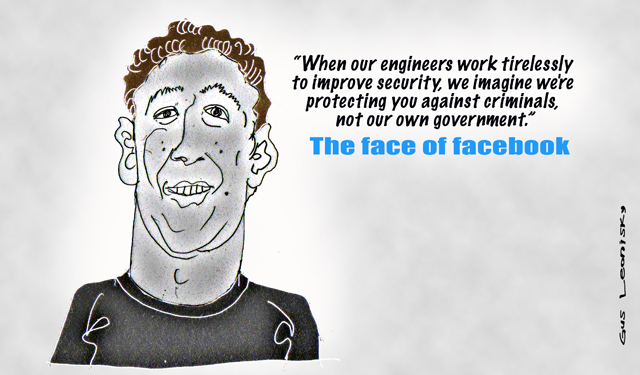

This is why I've been so confused and frustrated by the repeated reports of the behavior of the US government. When our engineers work tirelessly to improve security, we imagine we're protecting you against criminals, not our own government.

The US government should be the champion for the internet, not a threat. They need to be much more transparent about what they're doing, or otherwise people will believe the worst.

I've called President Obama to express my frustration over the damage the government is creating for all of our future. Unfortunately, it seems like it will take a very long time for true full reform.

So it's up to us -- all of us -- to build the internet we want. Together, we can build a space that is greater and a more important part of the world than anything we have today, but is also safe and secure. I'm committed to seeing this happen, and you can count on Facebook to do our part.

https://www.facebook.com/zuck/posts/10101301165605491?stream_ref=10

- By Gus Leonisky at 14 Mar 2014 - 9:57pm


a social weak link...

Facebook has received criticism on a wide range of issues, including its treatment of its users, online privacy, child safety, hate speech, and the inability to terminate accounts without first manually deleting the content. In 2008, many companies removed their advertising from the site because it was being displayed on the pages of individuals and groups they found controversial. The content of some user pages, groups, blogs, and forums has been criticized for promoting or dwelling upon controversial and often divisive topics (e.g., politics, religion, sex, etc.). There have been several censorship issues, both on and off the site.

In the lifespan of its service, Facebook has made many changes that directly impact its users, and their changes often result in criticism. Of particular note are the new user interface format launched in 2008, and the changes in Facebook's Terms of Use, which removed the clause detailing automatic expiry of deleted content. Facebook has also been sued several times.[1]

On August 19, 2013, it was reported that a Facebook user from Palestinian Autonomy, Khalil Shreateh, found a bug that allowed him to post material to other users' Facebook Walls. Users are not supposed to have the ability to post material to the Facebook Walls of other users unless they are approved friends of those users. To prove that he was telling the truth, Shreateh posted material to the wall of Sarah Goodin, a friend of Facebook CEO Mark Zuckerberg. Following this, Shreateh contacted Facebook's security team with the proof that his bug was real, explaining in detail what was going on. Facebook has a bounty program in which it compensates people a $500+ fee for reporting bugs instead of using them to their advantage or selling them on the black market. However, it was reported that instead of fixing the bug and paying Shreateh the fee, Facebook originally told him that "this was not a bug" and dismissed him. Shreateh then tried a second time to inform Facebook, but they dismissed him yet again. On the third try, Shreateh used the bug to post a message to Mark Zuckerberg's Wall, stating "Sorry for breaking your privacy ... but a couple of days ago, I found a serious Facebook exploit" and that Facebook's security team was not taking him seriously. Within minutes, a security engineer contacted Shreateh, questioned him on how he performed the move and ultimately acknowledged that it was a bug in the system. Facebook temporarily suspended Shreateh's account and fixed the bug after several days. However, in a move that was met with much public criticism and disapproval, Facebook refused to pay out the $500+ fee to Shreateh; instead, Facebook responded that by posting to Zuckerberg's account, Shreateh had violated one of their terms of service policies and therefore "could not be paid." In addition, the Facebook team strongly censured Shreateh over his manner of resolving the matter. In closing, they asked that Shreateh continue to help them find bugs.

http://en.wikipedia.org/wiki/Criticism_of_Facebook

limited intelligence...

United States president Barack Obama has met with bosses from Facebook, Google and other internet giants to discuss plans to overhaul the surveillance practices of America's spy agencies.

Those attending included Google's executive chairman Eric Schmidt and Facebook founder Mark Zuckerberg, who says he last week called the president personally to express frustration with the vast online intelligence dragnets.

A White House official says the meeting is part of Mr Obama's continuing dialogue on the issues of privacy, technology and intelligence.

"The president reiterated his administration's commitment to taking steps that can give people greater confidence that their rights are being protected, while preserving important tools that keep us safe," the White House said.

But Mr Zuckerberg, a public critic of government data gathering practices, says more needs to be done.

"While the US government has taken helpful steps to reform its surveillance practices, these are simply not enough," he said through a spokesperson.

"People around the globe deserve to know that their information is secure and Facebook will keep urging the US government to be more transparent about its practices and more protective of civil liberties," he said.

Some of the largest US technology companies, including Google, its rival Yahoo, social networking site Twitter and others, have been pushing for more transparency, oversight and restrictions to the US government's gathering of intelligence.

http://www.abc.net.au/news/2014-03-22/obama-meets-with-facebook2c-google-bosses-over-internet-survei/5338694

losing business....

IBM is spending more than a billion dollars to build data centers overseas to reassure foreign customers that their information is safe from prying eyes in the United States government.

And tech companies abroad, from Europe to South America, say they are gaining customers that are shunning United States providers, suspicious because of the revelations by Edward J. Snowden that tied these providers to the National Security Agency’s vast surveillance program.

Even as Washington grapples with the diplomatic and political fallout of Mr. Snowden’s leaks, the more urgent issue, companies and analysts say, is economic. Technology executives, including Mark Zuckerberg of Facebook, raised the issue when they went to the White House on Friday for a meeting with President Obama.

It is impossible to see now the full economic ramifications of the spying disclosures — in part because most companies are locked in multiyear contracts — but the pieces are beginning to add up as businesses question the trustworthiness of American technology products.

http://www.nytimes.com/2014/03/22/business/fallout-from-snowden-hurting-bottom-line-of-tech-companies.html?hp&_r=0

facebuck...

Facebook founder Mark Zuckerberg earned $3.3bn (£1.9bn) on the sale of share options in 2013, a new regulatory filing has revealed.

Mr Zuckerberg has now exhausted his supply of stock options as a result of Facebook's public offering.

He was given 60 million shares to help him with his tax bill.

His base salary for 2013 fell to $1, like other tech leaders such as Google's Larry Page and former Apple boss Steve Jobs.

However, his total compensation for the year was $653,165, down from $1.99m in 2012.

Facebook said the majority of that was to pay for flights on private jets, which are seen as necessary for security reasons.

Mr Zuckerberg still owns 426.3 million Facebook shares, which are worth around $25.7bn.

Shares in the social networking giant have more than doubled in value over the past year, as Facebook has reported better than expected earnings due to its strong mobile ad sales.

http://www.bbc.com/news/business-26830388

no idea what all this switcheroo means...

In a global rollout from today, Facebook will start removing the message function from its mobile app for iOS and Android and instead require users to install its standalone Messenger app, which, it says, is "fast and reliable."

Facebook is about to eliminate the message feature of its mobile app, pushing its users to install the company’s standalone app Messenger instead, TechCrunch reports.

The company has begun sending out notifications to users in Europe saying that the message service will disappear from Facebook’s main mobile app for iOS and Android in about two weeks.

“We have built a fast and reliable messaging experience through Messenger and now it makes sense for us to focus all our energy and resources on that experience,” the company said in a statement Wednesday, Reuters reports.

Users in a handful of European countries, including England and France, will be the first users forced to download the Messenger app, but eventually users in all countries will see the message service in the main app disappear, spokesman Derick Mains said to Reuters.

read more: http://time.com/57019/facebook-messaging-messenger-app/

emotional contagion is not new...

There are two interesting lessons to be drawn from the row about Facebook's "emotional contagion" study. The first is what it tells us about Facebook's users. The second is what it tells us about corporations such as Facebook.

In case you missed it, here's the gist of the story. The first thing users of Facebook see when they log in is their news feed, a list of status updates, messages and photographs posted by friends. The list that is displayed to each individual user is not comprehensive (it doesn't include all the possibly relevant information from all of that person's friends). But nor is it random: Facebook's proprietary algorithms choose which items to display in a process that is sometimes called "curation". Nobody knows the criteria used by the algorithms – that's as much of a trade secret as those used by Google's page-ranking algorithm. All we know is that an algorithm decides what Facebook users see in their news feeds.

So far so obvious. What triggered the controversy was the discovery, via the publication of a research paper in the prestigious Proceedings of the National Academy of Sciences, that for one week in January 2012, Facebook researchers deliberately skewed what 689,003 Facebook users saw when they logged in. Some people saw content with a preponderance of positive and happy words, while others were shown content with more negative or sadder sentiments. The study showed that, when the experimental week was over, the unwitting guinea-pigs were more likely to post status updates and messages that were (respectively) positive or negative in tone.

Statistically, the effect on users was relatively small, but the implications were obvious: Facebook had shown that it could manipulate people's emotions! And at this point the ordure hit the fan. Shock! Horror! Words such as "spooky" and "terrifying" were bandied about. There were arguments about whether the experiment was unethical and/or illegal, in the sense of violating the terms and conditions that Facebook's hapless users have to accept. The answers, respectively, are yes and no because corporations don't do ethics and Facebook's T&Cs require users to accept that their data may be used for "data analysis, testing, research".

Facebook's spin-doctors seem to have been caught off-guard, causing the company's chief operating officer, Sheryl Sandberg, to fume that the problem with the study was that it had been "poorly communicated". She was doubtless referring to the company's claim that the experiment had been conducted "to improve our services and to make the content people see on Facebook as relevant and engaging as possible".

read more: http://www.theguardian.com/technology/2014/jul/06/we-shouldnt-expect-facebook-to-behave-ethically

tempora spies on your facebook...

A tribunal is to hear a legal challenge by civil liberty groups against the alleged use of mass surveillance programmes by UK intelligence services.

Privacy International and Liberty are among those to challenge the legality of alleged "interception, collection and use of communications" by agencies.

It follows revelations by the former US intelligence analyst Edward Snowden about UK and US surveillance practices.

The UK government says interception is subject to strict controls.

The case - also brought by Amnesty International and the American Civil Liberties Union and other groups - centres on the alleged use by UK intelligence and security agencies of a mass surveillance operation called Tempora.

The UK government has neither confirmed nor denied the existence of the operation.

But documents leaked by whistleblower Mr Snowden and published in the Guardian newspaper claimed the existence of Tempora, which the paper said allowed access to the recordings of phone calls, the content of email messages and entries on Facebook.

http://www.bbc.com/news/uk-28286105

See toon at top and article below it...

real spooks on facebook...

Facebook, the world’s top social media platform, is reportedly seeking to hire hundreds of employees with US national security clearance licenses.

Purportedly with the aim of weeding out “fake news” and “foreign meddling” in elections.

If that plan, reported by Bloomberg, sounds sinister, that’s because it is. For what it means is that people who share the same worldview as US intelligence agencies, the agencies who formulate classified information, will have a direct bearing on what millions of consumers on Facebook are permitted to access.

It’s as close to outright US government censorship on the internet as one can dare to imagine, and this on a nominally independent global communication network. Your fun-loving place “where friends meet.”

Welcome to Facespook!

As Bloomberg reports: “Workers with such [national security] clearances can access information classified by the US government. Facebook plans to use these people – and their ability to receive government information about potential threats – in the company’s attempt to search more proactively for questionable social media campaigns ahead of elections.”

A Facebook spokesman declined to comment, but the report sounds credible, especially given the context of anti-Russia hysteria.

Over the past year, since the election of Donald Trump as US president, the political discourse has been dominated by “Russia-gate” – the notion that somehow Kremlin-controlled hackers and news media meddled in the election. The media angst in the US is comparable to the Red Scare paranoia of the 1950s during the Cold War.

Facebook and other US internet companies have been hauled in front of Congressional committees to declare what they know about alleged “Russian influence campaigns.” Chief executives of Facebook, Google, and Twitter, are due to be questioned again next month by the same panels.

read more:

https://www.rt.com/op-edge/407078-facebook-social-media-intelligence-cen...

that unsocial shit on facebook is you...

Palihapitiya’s comments last month were made one day after Facebook’s founding president, Sean Parker, criticized the way that the company “exploit[s] a vulnerability in human psychology” by creating a “social-validation feedback loop” during an interview at an Axios event.

Parker had said that he was “something of a conscientious objector” to using social media, a stance echoed by Palihapitiya, who said that he was now hoping to use the money he made at Facebook to do good in the world.

“I can’t control them,” Palihapitiya said of his former employer. “I can control my decision, which is that I don’t use that shit. I can control my kids’ decisions, which is that they’re not allowed to use that shit.”

He also called on his audience to “soul search” about their own relationship to social media. “Your behaviors, you don’t realize it, but you are being programmed,” he said. “It was unintentional, but now you gotta decide how much you’re going to give up, how much of your intellectual independence.”

read more:

https://www.theguardian.com/technology/2017/dec/11/facebook-former-execu...

facebook: we can’t promise we won’t destroy democracy...

SAN FRANCISCO — Facebook Inc. warned Monday it could offer no assurance that social media was on balance good for democracy, but the company said it was trying what it could to stop alleged meddling in elections by Russia or anyone else.

The sharing of false or misleading headlines on social media has become a global issue, after accusations that Russia tried to influence votes in the United States, Britain and France. Moscow denies the allegations.

Facebook, the largest social network with more than 2 billion users, addressed social media’s role in democracy in blog posts from a Harvard University professor, Cass Sunstein, and from an employee working on the subject.

“I wish I could guarantee that the positives are destined to outweigh the negatives, but I can’t,” Samidh Chakrabarti, a Facebook product manager, wrote in his post.

Facebook, he added, has a “moral duty to understand how these technologies are being used and what can be done to make communities like Facebook as representative, civil and trustworthy as possible.”

Contrite Facebook executives were already fanning out across Europe this week to address the company’s slow response to abuses on its platform, such as hate speech and foreign influence campaigns.

Read more:

https://nypost.com/2018/01/22/facebook-we-cant-promise-we-wont-destroy-d...

Read from top...

fuckbook...

The UK's Information Commissioner says she will seek a warrant to look at the databases and servers used by British firm Cambridge Analytica.

The company is accused of using the personal data of 50 million Facebook members to influence the US presidential election in 2016.

Its executives have also been filmed by Channel 4 News suggesting it could use honey traps and potentially bribery to discredit politicians.

The company denies any wrongdoing.

Fresh allegations

On Monday, Channel 4 News broadcast hidden camera footage in which Cambridge Analytica chief executive Alexander Nix appears to suggest tactics his company could use to discredit politicians online.

In the footage, asked what "deep digging" could be done, Mr Nix told an undercover reporter: "Oh, we do a lot more than that."

He suggested one way to target an individual was to "offer them a deal that's too good to be true and make sure that's video recorded".

He also said he could "send some girls around to the candidate's house..." adding that Ukrainian girls "are very beautiful, I find that works very well".

Mr Nix continued: "I'm just giving you examples of what can be done and what has been done."

Channel 4 News said its reporter had posed as a fixer for a wealthy client hoping to get a political candidate elected in Sri Lanka.

However, Cambridge Analytica said the report had "grossly misrepresented" the conversations caught on camera.

"In playing along with this line of conversation, and partly to spare our 'client' from embarrassment, we entertained a series of ludicrous hypothetical scenarios," the company said in a statement.

"Cambridge Analytica does not condone or engage in entrapment, bribes or so-called 'honeytraps'," it said.

Mr Nix told the BBC's Newsnight programme that he regarded the report as a "misrepresentation of the facts" and said he felt the firm had been "deliberately entrapped".

Read more:

http://www.bbc.com/news/technology-43465700

Read from top

no influence whatsoever on the morons...

Mark Zuckerberg has admitted Facebook "made mistakes" in protecting users' data and has announced a suite of changes to the social network in the wake of the Cambridge Analytica scandal.

He says Facebook will now impose stricter rules on developers of third-party apps that collect your data, and will create a new section in your News Feed where you can review those that you use.

"There's more to do, and we need to step up and do it," he said in his first statement on the matter this morning.

Facebook suspended the London-based political research company Cambridge Analytica last week over allegations that it kept improperly obtained user data after telling the social media giant it had been deleted.

The data was reportedly collected by University of Cambridge psychology academic Aleksandr Kogan via a survey app on Facebook years ago, and then passed onto Cambridge Analytica, which used it to target people with political advertising during the 2016 US election campaign.

Read more:

http://www.abc.net.au/news/2018-03-22/facebook-mark-zuckerberg-admits-mi...

a social pox for sale...

The proximate cause is the Cambridge Analytica controversy. In violation of Facebook’s rules, the Trump-linked political consultancy schemed to get access to the data of 87 million users. This has made Facebook a scapegoat for Trump’s victory on par with the Russians and James Comey (at least before the FBI director got fired and became a Trump adversary).

In 2012, Barack Obama’s re-election campaign did a less-underhanded version of the same thing as Cambridge. The great chronicler of the Obama digital operation, Sasha Issenberg, wrote of how its “ ‘targeted sharing’ protocols mined an Obama backer’s Facebook network in search of friends the campaign wanted to register, mobilize, or persuade.”

No scandal ensued — rather, the Obama digital mavens were hailed as geniuses who changed campaigning forever.

It’s not Zuckerberg’s fault that he has suddenly been deemed on the wrong side of history, but the Cambridge Analytica blow-up is bringing a useful spotlight on the most sanctimoniously self-regarding large company in America.

Facebook can’t bear to admit that it has garnered the largest collection of data known to man to sell ads against and line the pockets of its founder and investors.

The problem isn’t that Zuckerberg is a businessman, and an exceptionally gifted one, but that he pretends to have stumbled out of the lyrics of John Lennon’s song “Imagine.” To listen to him, Facebook is all about connectivity and openness — he just happens to have made roughly $63 billion as the T-shirt-wearing champion of “the global community,” whatever that means.

It’s this pose that makes him and other Facebook officials sound so shifty. In a rocky interview with Savannah Guthrie of “The Today Show” last week, Sheryl Sandberg was asked what product Facebook sells. “We’re selling the opportunity to connect with people,” she said according to The Washington Post, before catching herself, “but it’s not for sale.”

Something or other must be for sale, or Facebook is the first company to rocket to the top ranks of corporate America based on having no product or profit motive. Guthrie, persisting, stated that Facebook sweeps up data for the use of advertisers. Sandberg objected, “We are not sweeping data. People are inputting data.”

Uh, yeah. That’s the genius of it. In a reported exchange with a friend while he was a student at Harvard, Zuckerberg boasted of having data on thousands of students because “people just submitted it.”

Read more:

https://nypost.com/2018/04/09/facebook-has-always-been-one-big-swindle/

moreshit

Facebook CEO Mark Zuckerberg has begun a two-day congressional inquisition declaring his company is facing an "arms race" with Russia as foreign actors seek to interfere in elections.

Mr Zuckerberg told members of the Senate Judiciary and Commerce committees his company was trying to change in light of recent criticism.

"We've deployed new AI tools that do a better job of identifying fake accounts that may be trying to interfere in elections or spread misinformation," he said.

"There are people in Russia whose job it is to try to exploit our systems and other internet systems … so this is an arms race.

"They're going to keep on getting better at this and we need to invest in keeping on getting better at this too."

Amid concern from US politicians that Russia and other foreign powers will try to meddle in upcoming midterm elections, Mr Zuckerberg said security was being stepped up.

"We're going to have more than 20,000 people by the end of this year working on security and content review across the company," he said.

Read more shit from Zuck at:

http://www.abc.net.au/news/2018-04-11/facebook-boss-mark-zuckerberg-fron...

What a lot of shit from Zuck... It's like a shipbuilder blaming the rivetboy for the sinking of the Titanic... Let's "face it", Facebook is easily opened like a tin of sardines with a pop-up lid.

Read from top...

lawsuit...

State Attorney General Bob Ferguson sued Facebook and Google on Monday, saying they have not produced documents requested by the media and members of the public related to political ads for local and state elections since 2013.

“We can’t have a world here in Washington State where we’re transparent on radio buys, we’re transparent on TV buys, but we’re not transparent when it comes to ads on Facebook and Google,” Ferguson told The Stranger, a Seattle newspaper. “That’s not okay.”

The lawsuit is apparently not related to the claims of 'Russian online interference' in the 2016 US presidential election, though Ferguson called them a “backdrop” showing how important transparency was. Instead, the case relates to the state and local laws adopted in the 1970s, long before Google or Facebook were in existence.

Washington State Initiative 276, passed in 1972, includes the section called “Commercial Advertisers' Duty to Report.” A nearly identical ordinance was passed in 1977 by the City of Seattle. The definitions of “commercial advertiser” and “political advertising” in the laws are so broad, the authorities say, they apply to digital platforms that have since been established, such as Google and Facebook.

Facebook has sought to deflect criticism over the so-called “dark posts” and other advertising, by creating a registry of ads with political content. Even that falls short of the reporting requirements in Seattle and Washington, however, as the laws require the documents to be “open for public inspection,” rather than just Facebook users.

Read more:

https://www.rt.com/usa/428722-facebook-google-sued-seattle/

Read from top.

but you still cannot show tits...

Mark Zuckerberg denied that he defended the rights of Holocaust deniers on Wednesday — in an attempt to clarify earlier comments he made on a podcast.

Zuckerberg gave the explanation to Recode after the site aired audio of the Facebook founder claiming “abhorrent” content has a right to spread across his massive social media network.

His response called the issue of censoring Facebook trolls “challenging.”

“I personally find Holocaust denial deeply offensive, and I absolutely didn’t intend to defend the intent of people who deny that,” Zuckerberg told the website.

“Of course if a post crossed a line into advocating for violence or hate against a particular group, it would be removed… These issues are very challenging but I believe that often the best way to fight offensive bad speech is with good speech.”

In his earlier remarks, the Facebook founder said that although Holocaust denial was “deeply offensive” it should not be removed from the website.

“At the end of the day, I don’t believe that our platform should take that down because I think there are things that different people get wrong,” he said.

Read more:

https://nypost.com/2018/07/18/zuckerberg-attempts-to-clarify-holocaust-d...

twittenberg to do editing of news humanly...

Mark Zuckerberg is considering hiring human “editors” to hand-pick “high-quality news” to show Facebook users in an effort to combat fake news — and no, it’s not an April Fool’s joke.

In his ongoing quest to satisfy the political censorship demands of Western governments, Zuckerberg told German publishing house Axel Springer that he is considering the introduction of a dedicated news section for the social media platform, which would potentially use humans to curate the news from “broadly trusted” outlets. Zuckerberg said Facebook might also start paying news publishers to include their articles in this dedicated news section in an effort to reward “high-quality, trustworthy content.”

With social media censorship already at worryingly high levels, who will decide which outlets are “broadly trusted” and which are untrustworthy? What qualifies one outlet as more “trusted” than another? Will Zuckerberg make the criteria public?

Read more:

https://www.rt.com/news/455306-facebook-curate-news-feed/

Read from top.

going on another digitrack...

Mark Zuckerberg now says he would rather have government set standards than try to do so himself.

So Apple, too, wants to stream everything to you. The company has created Apple TV+ to compete with Netflix, Amazon Prime, and all the other streaming outfits. In addition, it wants to stream music, the printed word, and even games.

These days, it seems that everyone but the Amish are living their lives online. That is, they go there to find not just news and entertainment, not just e-commerce, but also digital assistance (Siri and Alexa), physical assistance (Task Rabbit, Fiverr), and transportation (Uber and Lyft). Why, there’s even Meural, which brings fine art into the home—digitally, for a monthly fee. And in each instance, of course, the digital company knows exactly what you’ve bought, done, or otherwise been up to.

Indeed, even those who don’t use any of these services are still Netizens, through their credit cards, their smartphones, their web browsers, their cable TV subscriptions, and other digital devices, including security gadgets in their homes, GPS and black boxes in their cars, security cameras in public spaces, keystroke monitors in the workplace, and the greatest data grab of them all, the Internet of Things.

Come to think of it, even the Amish, seemingly pastoral in their analog autonomy, can’t truly stay off the grid. They’re being tracked any time they interact with the government, which in turn puts their data, such as it is, in the same bucket as everyone else’s.

All this digital subsuming, of course, is legal. As for what’s illegal, just in 2018, the top 10 data breaches affected 2.5 billion people, or at least that many accounts.

Speaking of illegality so common as to barely be newsworthy, we might consider the little-noticed case of Harold Thomas Martin, the former National Security Agency employee who just pled guilty to stealing some 50 terabytes of data from the NSA over two decades. (A terabyte is 1,000 gigabytes, if that helps.)

Interestingly, Martin doesn’t seem to have done anything with the data. Unlike his former NSA colleague, Edward Snowden, Martin seems to have been content to simply hoard it, packrat-style. And so it’s also interesting that Martin—who obviously has had mental health issues—managed to get and keep multiple security clearances over the years, even as he was piling up a stash 300 times larger than Snowden’s. As one expert observed: “What has changed over the past several decades or so is that the scale of the breaches has increased tremendously. Instead of individual secrets being compromised, it often turns out that entire databases or libraries of information.”

Such is the power of digitalization, sweeping away all those analog islands of privacy, making it easy to move whole Alexandria Libraries with a click of the mouse.

So we can ask: if even the NSA can’t adequately police its data, what are the chances that the average data company hiring temps and other nomads—here in the U.S. and in scores of countries around the world—is keeping its data in good order?

In fact, data are so sloshy that one is tempted to say that these days a data leak is comparable to seawater flowing from the North Atlantic to the South Atlantic. Given the adjacency, what did you expect?

Of course, it can certainly be argued that most people don’t truly care about their privacy—or perhaps have simply given up. Moreover, it appears that many people, especially the young, see “sharing” as part of the digital deal.

For instance, there’s Venmo, the e-payment system that’s part of Paypal. According to a report in Gizmodo last year, its users didn’t seem to understand that they were “sharing” their transaction histories not just with their friends, but with the entire internet. Or maybe users did know and they were fine with such disclosure. Perhaps, in the spirit of Andy Warhol, they were happy to think their digital lives would be famous for 15 minutes. In any case, Venmo is still in business.

Yet at the same time, there’s been plenty of pushback from the political system. That is, for reasons best explained by political scientists—it often goes under the name of social choice theory—personal choices are not the same as political choices. So even those whose personal actions bespeak a laissez-faire attitude toward privacy might nonetheless be militant about it in the political sphere.

On that score, the hottest digital button these days is Facebook. Looking back on the company’s last decade of tumultuous growth, one might conclude that while it was okay for the 2008 Obama campaign to use Facebook to access everyone’s information, it was not okay for the 2016 Trump campaign to do so.

Yet for a while there, Facebook’s Mark Zuckerberg didn’t seem to understand that the ’16 election was being viewed differently from the ’08 election. Immediately after Trump’s victory, Zuck insisted that there was nothing to worry about. Yet within a year, he acknowledged the need to do something. Furthermore, in April 2018, he found himself testifying on Capitol Hill, touting his new commitment to good digital citizenship.

In fact, Facebook had by then launched a fascinating political science experiment of its own—a worldwide instant shadow government. That is, it hired lawyers to determine appropriate speech codes, and then it contracted with tens of thousands of low-paid content monitors to enforce those codes.

In other words, it was as if all the arguments about speech ever made anywhere—free speech, hate speech, speech that enlightens, speech that befogs—were summarized and then crammed into a thumb drive, so that mostly young people, consuming lots of energy drinks and pizza, could dope out appropriate rules for the planet’s digital discourse. (In a way, the nation-building hubris of the Coalition Provisional Authority in Iraq 15 years ago—all that planning for a better world—was weirdly recaptured in conference rooms in Menlo Park, California. Happily for them, Facebookers were spared the bloody folly that ensued.)

Still, nobody was happy with Facebook’s effort, and so now, Zuckerberg—a man known for his imperial ambition—is backing away from the once-beckoning vision of globe-girdling enforcement. Instead, he’s now anchoring himself in more realistic political wisdom. In particular, he wants to hand off the problem to others, taking the onus off his social network. That revised goal was clear enough in a March 30 op-ed in The Washington Post, in which Zuckerberg called for help on a number of issues, including content monitoring:

One idea is for third-party bodies to set standards governing the distribution of harmful content and to measure companies against those standards. Regulation could set baselines for what’s prohibited and require companies to build systems for keeping harmful content to a bare minimum.

So let’s recap. Once upon a time—that is, just a little more than two years ago—Facebook said there was no problem. Then it said that there was a problem, and that it was being handled. Now Facebook is saying that it wants third parties to handle the problem. So here’s a cinchy prediction: those third parties will turn out to be governments, starting with the U.S. government—and that will be just dandy with Facebook.

The idea that companies actively want to be regulated is a familiar one. A half-century ago, economic historian Gabriel Kolko published a classic text, The Triumph of Conservatism: A Reinterpretation of American History, 1900-1916, which argued that it was the political progressives of the era who inspired the creation of the Federal Trade Commission, which, in Kolko’s view, was a conservative—as in status quo-oriented—bureaucracy.

Read more:

https://www.theamericanconservative.com/articles/facebook-is-thrilled-un...

Read from top.

we can't make a cesspool smell better by introducing more turds.

From TAC (https://www.theamericanconservative.com/articles/drowning-in-the-internets-political-sewers/)

Progressives have made a valiant bid for control of the internet’s political sewers. Alas, their memes were never as funny and their fake news was never as convincing. #NotMyPresident just isn’t as viscerally satisfying as #LockHerUp. So the Left is doing what any zealot would do: if they can’t win the game, they’ll at least try to bring down the stadium. Thus did Elizabeth Warren write in a (slightly hypocritical) Facebook ad:

Facebook and Google account for 70% of all internet traffic – if we didn’t run ads on Facebook, like this one, we wouldn’t be able to get our message out around the country. But that’s not how the internet should work. We need to break up the three big tech companies that dominate the internet, stifle competition, and influence how our democracy works. But it’s going to take a grassroots movement to get it done. So if you’re with me, then join us today.

Now, I’m 100 percent behind Senator Warren’s war on Silicon Valley. But what does she expect in the way of “competition”? Destroying Google would empower Bing and Yahoo, which are owned by Microsoft and Verizon, respectively. (They’re also garbage.) And what does she imagine will succeed the social media giants? A more open-concept Facebook? That’s Twitter. A less politicized Twitter? That’s Facebook. Twitter and Facebook, but with pictures instead of words? That’s Instagram.

The point of competition is to produce a better product, but it doesn’t get any better than this. In fact, it only gets worse. Because the problem with the internet isn’t the lack of competition—the internet is the problem. Don’t matter how many times you slice the pie: it’s still filled with pig anuses and battery acid.

I went into all of this in greater detail in my recent Luddite manifesto, but it bears repeating: social media acts like a poker machine. It creates what are called ludic loops: “repeated cycles of uncertainty, anticipation and feedback—and the rewards are just enough to keep you going.”

These sites change your brain chemistry to produce psychological cravings. For most, the “reward” for putting another coin in the slot (racking up likes on a selfie, for instance) produces a rush like cocaine. For politics junkies, it’s much more like road rage. We get high off the anger and hatred. We lose our capacity for empathy.

“That’s not how the internet should work either,” Senator Warren might retort. Sure. And maybe war oughtn’t to be about killing people. Maybe it ought to be about sitting under oak trees and feeding each other strawberries until we sort out our problems using words. But whatever you want to call that conflict resolution process, it isn’t war. And whatever you want to call that place—the place devoid of rageaholics getting whipped up over fake news and laughing at vulgar, nihilistic memes—it isn’t the internet.

For one, that’s how Facebook and Twitter make their money. Anyone who wants to curtail the ludic loop mechanisms on social media would also have to ban gambling. (I’m game.) More importantly, people like splashing around in the raw sewage that bubbles up on social media. If folks wanted nuanced, thoughtful content on their newsfeed, The American Conservative would have two billion subscribers. But they don’t. The people want sensationalist, hyper-partisan garbage that can be reduced to snide, 280-character tweets, including at least 16 exclamation points and/or question marks. That’s why Facebook has two billion users.

Senator Warren’s beef is really with human beings and the stupid decisions we make. So she’s doing what any socialist would do: take away our ability to make decisions. She’s going to use government regulation to overcome human frailty. She’ll utilize the coercive power of the state to create a new, better internet that’s suited exactly to her own liking.

But what Senator Warren and her comrades envision can’t be accomplished by a bureaucrat. Only an alchemist could transmute this titanic dumpster fire we call the World Wide Web into some kind of Plato’s Academy, tastefully decorated with pictures of cats and populated exclusively by plus-sized disabled LGBTQ people of color.

The internet is what it is, and it can only get worse from here. If you don’t like it, don’t use it. And you shouldn’t. So don’t.

Michael Warren Davis is associate editor of the Catholic Herald. Find him at www.michaelwarrendavis.com.

And is our elevated pseudo-Aristophanes satire why yourdemocracy.net.au does not have a single subscriber?...

-----------------------

Just look at our politicians in every town:

When they are poor they behave properly,

But after they’ve fleeced the treasury and waxed wealthy

They change their tune,

Undermining democracy and turning against the people.

-------

It’s very like the way we treat our money

The noble silver drachma, that of old

We were so proud of, and the recent gold,

Coins that rang true, clean-stamped and worth their weight

Throughout the world, have ceased to circulate.

Instead the purses of Athenian shoppers

Are full of shoddy silver-plated coppers...

-----------------

And not long ago, didn’t we all swear

That the two-and-a-half percent tax proposed by Heurippides

Would yield the state five hundred talents? And immediately

Wasn’t Heurippides our darling golden boy,

Till we looked into the matter more closely

And saw that the whole thing was a damn fantasy,

Impossible to realize?

Read from top.

Gus is a rabid fierce atheist.

See also: Lucian of Samosata in

http://www.yourdemocracy.net.au/drupal/node/35062

in the billionaire cattle-class...

Hitting back against presidential candidate Bernie Sanders’s assertion that billionaires should not exist – and his calls to tax their wealth at much higher rates – Facebook CEO Mark Zuckerberg, worth $70bn, took to Fox News to defend his beleaguered class. Billionaires, he argued, should not exist in a “cosmic sense,” but in reality most of them are simply “people who do really good things and kind of help a lot of other people. And you get well compensated for that.” He warned too about the dangers of ceding too much control over their wealth to the government, allegedly bound to stifle innovation and competition and “deprive the market” of his fellow billionaires’ funding for philanthropy and scientific research.

“Some people think that, okay, well the issue or the way to deal with this sort of accumulation of wealth is, ‘Let’s just have the government take it all,’” Zuckerberg said. “And now the government can basically decide, you know, all of the medical research that gets done.” What he didn’t mention is that Sanders’s tax would cost him $5.5bn in its first year.

Zuckerberg’s reasoning isn’t unique among the 1%, especially in Silicon Valley: people with outrageous wealth have earned it through their own cunning, creating a vital service for the world that furthers the common good. Their success, this myth tells us, is a testament to their ability to divine what’s best for society and bring it into existence; their fortunes are commensurate to their genius. Philanthropy, as such, isn’t just an alternative to taxing them more but far preferable. After all, what could some collection of nameless, faceless bureaucrats know better than a man – and they’re usually men – who has built such vast wealth? Vital innovation, Zuckerberg threatens, will only happen if you’re nice enough to him and his rich friends.

As common as this argument is, it also happens not to be true. Take the basis of Mark Zuckerberg’s fortune. The internet was developed out of a small Pentagon network intended to allow the military to exchange information during the Cold War. In her book The Entrepreneurial State, economist Mariana Mazzucato shows that iPhones – the ones that Facebook skims prolific amounts of data off of to sell to the highest bidder – are in large part a collection of technologies created by various state agencies, cobbled together by Apple into the same sleek case.

Instead of leading the way to improve health outcomes, the quest for profits in medicine has led drug companies to produce products just different enough from those of their competitors to patent, effectively allowing these firms to collect rent from the sick. And of the top 88 innovations rated by R&D Magazine as the most important between 1971 and 2006, economists Fred Block and Matthew Keller have found that 77 were the beneficiaries of substantial federal research funding, particularly in early stage development. “If one is looking for a golden age in which the private sector did most of the innovating on its own without federal help,” they write, “one has to go back to the era before World War II.” Along the same timeline that the right has dragged the reputation of the public sector, it has only become more central to progress the private sector has taken credit for.

Let’s also not forget about the myriad boondoggles pumped out of Silicon Valley in the last decade, from Theranos to the Fyre Festival to Juicero. The recent implosion of the real estate company WeWork – backed heartily by SoftBank and JP Morgan Chase, and losing one dollar for every dollar it makes – should cast some doubt on the supposed genius of the private sector to overcome society’s most pressing challenges, or even to pick winners. Besides government funding, too, most wunderkind tech companies are propped up by armies of typically underpaid workers, whether they’re driving Ubers, mining the rare earth minerals needed for smartphones under brutal working conditions or watching hour after hour of grisly videos to moderate them out of our Facebook timelines.

This isn’t all to say that the private sector hasn’t played a significant role in driving innovation; someone needed to design the iPhone, after all. But the fortunes built off of each couldn’t exist were it not for the government more often than not taking the first step, funding innovation far riskier than venture capitalists and angel investors can usually stomach. “Not only has government funded the riskiest research,” Mazzucato writes, “but it has indeed often been the source of the most radical, path-breaking types of innovation.” The Mark Zuckerbergs of the world, in other words, can make good things happen. But they hardly ever do it alone.

Moreover, billionaires’ extravagant wealth is by and large not spent, as Zuckerberg suggests, on cutting edge research and philanthropic efforts. After they’ve bought up enough yachts and private jets they mainly invest in making themselves richer through casino-style financial speculation and in luxury real estate in starkly unequal cities like San Francisco, Miami and New York, where mostly vacant homes act as safety deposit boxes to shield wealth from taxation. Their money might also end up in tax havens like the Cayman Islands, where it can sit undisturbed by the long arm of the state. Very little of that ever trickles down to the 99%, where inequality has skyrocketed and wages have stagnated.

Zuckerberg’s plea for the billionaire class is above all else deeply anti-democratic, casting doubt on the huddled masses’ ability to decide what’s best for themselves while repeating myths that the public sector is doomed to be wasteful and stagnant. While they could play some role in funding the genuinely cutting edge research coming out of places like ARPA-E and the National Institutes of Health, perhaps the best argument for the kinds of policies Sanders has proposed would be to make it clear that billionaires can’t transcend democracy and call all the shots about what society needs and what it doesn’t. In a truly democratic world – where labor is valued fairly – they wouldn’t exist at all.

Kate Aronoff is a freelance journalist based in New York City

Read more: https://www.theguardian.com/commentisfree/2019/oct/21/mark-zuckerberg-plea-biillionaire-class-anti-democratic

Read from top...

centibillionaire bastards...

Facebook founder Mark Zuckerberg has seen his personal wealth rise to $100bn (£76bn) after the launch of a new short-form video feature.

On Wednesday, Facebook announced the US rollout of Instagram Reels, its rival to controversial Chinese app TikTok.

Facebook shares rose by more than 6% on Thursday. Mr Zuckerberg holds a 13% stake in the company.

He joins Amazon founder Jeff Bezos and Microsoft’s Bill Gates in the exclusive so-called 'Centibillionaire Club'.

Technology bosses have been in the spotlight recently as the size and power of their companies and their personal fortunes continue to grow.

Facebook, Amazon, Apple and Google have been among the biggest beneficiaries of coronavirus lockdowns and restrictions as more people shop, watch entertainment and socialise online.

Mr Zuckerberg’s personal wealth has gained about $22bn this year, while Mr Bezos's has grown by more than $75bn, according to Bloomberg.

Read more:

https://www.bbc.com/news/business-53689645

Read from top.

And my bank account is barely floating on a sea of hope and charitable borrowings from the other half, enough to buy a few red ned casks in advance, just in case... But one cannot value happiness... Meanwhile Lebanon is suffering more than ever.

no news on facebook? wunderbar!...

Facebook will block Australians from sharing local and international news on Facebook and Instagram if the news media code becomes law, the digital giant has warned of a landmark plan to make digital platforms pay for news content.

The sharing of personal content between family and friends will not be affected and neither will the sharing of news by Facebook users outside of Australia, the social network said.

The mandatory news code has been backed by all the major media companies including News Corp Australia, Nine Entertainment and Guardian Australia, as a way to offset the damage caused by the loss of advertising revenue to Facebook and Google.

See more:

https://www.theguardian.com/media/2020/sep/01/facebook-instagram-threate...

Read from top.

lazy meta......

Facebook pulls plug on ‘News Media Bargaining Code’ – what’s next?

by Kim Wingerei

Meta’s Facebook has declared they’ll stop paying Australian media outlets for content when current agreements expire. It’s sparked the ire of Rupert Murdoch’s News Corp and consternation within the Government. Kim Wingerei asks what may happen next.

The announcement by Meta came as the deals done three years ago are expiring over the next few months. In a joint statement, Communications Minister Michelle Rowland and Assistant Treasurer Stephen Jones called it “a dereliction of its commitment to the sustainability of Australian news media.” Michael Miller, Executive Chairman of News Corp, Australia, thundered in an op-ed,

the shockwaves for Australia, our democracy, economy and way of life, are profound.

For Miller, at least, it may be that dire: the payments from Meta (and Google) are important to News Corp’s profits in Australia, with advertising revenue down 9% in the last quarter alone. The rest of us will probably survive.

ABC’s Managing Director, David Anderson, offered a less dramatic but more practical response, saying, “…this funding is used, amongst other things, to support […] 60 journalists.”

Unlike the ABC, though, the commercial mainstream media recipients of the funding – News Corp, Nine Entertainment and Seven News Media – have never divulged how the revenue from Meta and Google has been used. Given their general decline in revenue and profits, there’s little to indicate it has been invested in staff.

For instance, Nine Entertainment reported 5254 employees at the end of FY2022, and 4753 at the end of FY2023. It is likely that the $50 million or more they receive annually from Meta and Google is used predominantly to prop up their net profit. According to an analyst report from Macquarie Bank, the payments represent 9% of Nine’s net profit.

World-leading reform?

The ‘News Media Bargaining Code’ was legislated with much fanfare three years ago. Then Treasurer Josh Frydenberg called it “a world-leading reform,” while mainstream media owners salivated at the prospect of getting a share of the social media giants’ revenue. Seven Media’s Kerry Stokes enthused “the proposed news media bargaining code has resulted in us being able to conclude negotiations that result in fair payment and ensure our digital future.”

It was, however, an unusual bit of law-making. Prefaced by months of posturing from both the Government and from Meta and Google, the final legislation was – allegedly – a result of a meeting between Meta God Mark Zuckerberg and Frydenberg. Australia’s mainstream media companies had long wanted a way to force the social media giants (‘digital platforms’) to pay for the news they produced which was shared on their platforms.

But instead of mandating it, the end result was a code that would only be enforced if the giants refused to pay.

The enforcement mechanism means that the Treasurer can ‘declare’ a digital platform to be designated. If that happens, the designated company would have to negotiate payments in good faith with any media company that wanted to, under the auspices of the Australian Communications and Media Authority (ACMA). If such negotiations fail, the code has provisions for mandatory arbitration.

So far, nobody has been designated. Facebook may well be the first. Incidentally, Google entered into five-year deals, so they have a couple of years to go (except for their deal with News Corp, which was for three years and is up for renewal in the next few months).

The story so far

The Government will argue that the code has worked because the threat of designation was enough for Meta and Google to enter into payment agreements with more than 30 media companies. Most of the agreements are commercial-in-confidence, so nobody knows for sure the total magnitude of the payments made. Estimates range from $200M to $350M a year, and it is safe to assume that the larger share of that money goes to the ‘big three’ media companies, The Guardian and the ABC.

In a review of the first 18 months of the code’s existence, Treasury declared it a ‘success’. Google had reached ‘at least’ 23 agreements, and Meta 13.

It is notable that non-commercial media outlets such as SBS and The Conversation are among those that have made deals with Google and not with Meta.

Facebook declined to negotiate with The Conversation and SBS, as well as many other quality media companies otherwise eligible under the Code.

In theory, that could have been the trigger for the Treasury to designate Meta and force them to negotiate, but it didn’t.

https://michaelwest.com.au/facebook-dumps-news-media-bargaining-code/

READ FROM TOP

FREE JULIAN ASSANGE NOW....